We compared six vision models on two tasks: describing images and extracting text. Brief results for each task and the final rankings follow.

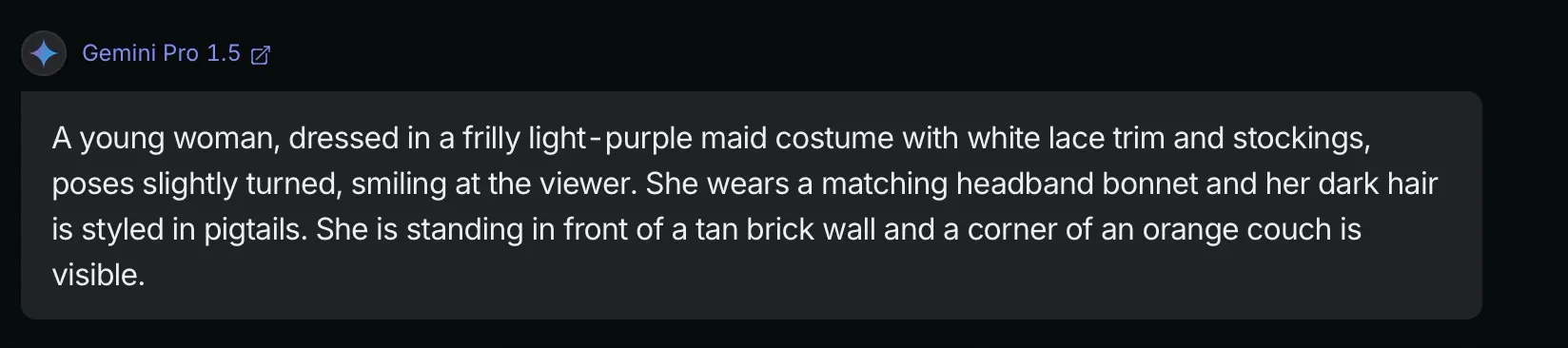

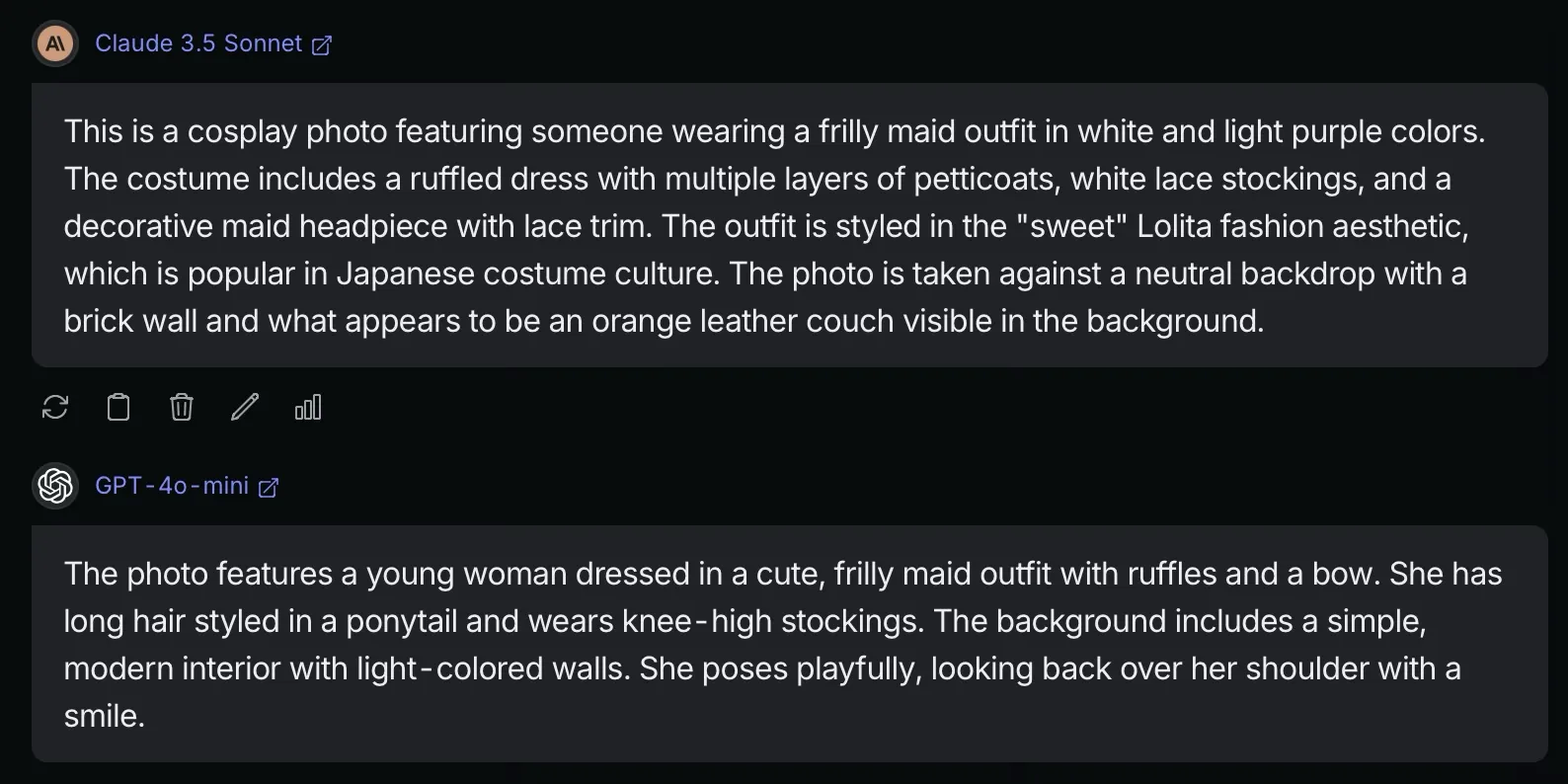

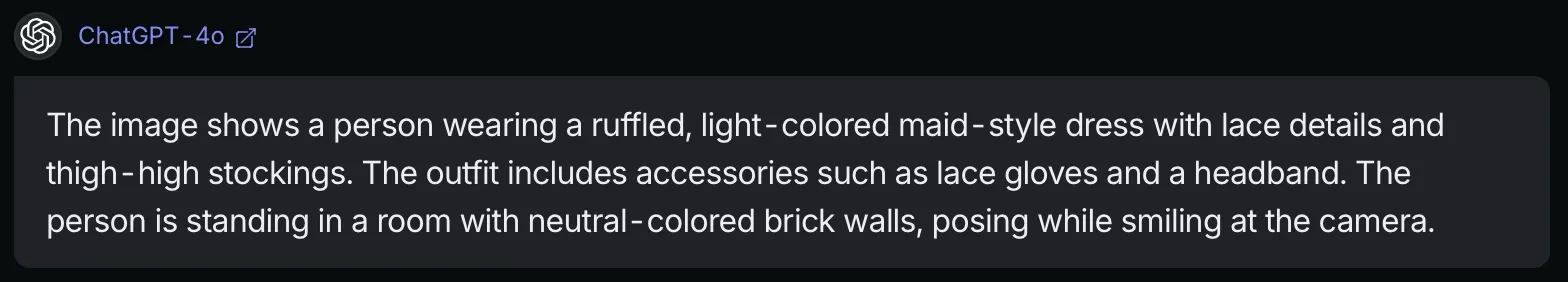

Question 1: Image Recognition — concise descriptions

Ranking (best to worst)

- Gemini Pro 1.5 — accurate, concise, well‑phrased.

- Gemini Flash 1.5 — clear and reliable; slightly less polished.

- ChatGPT‑4o — detailed and correct, though the phrasing can be verbose.

- Claude 3.5 Sonnet — thorough but tends to over‑explain.

- GPT‑4o mini — solid basics; lighter on fine details.

- Claude 3.5 Haiku — misinterpreted images in this test.

Question 2: Text Extraction — exact match

Target quote:

“You can’t connect the dots looking forward; you can only connect them looking backwards. So you have to trust that the dots will somehow connect in your future.”

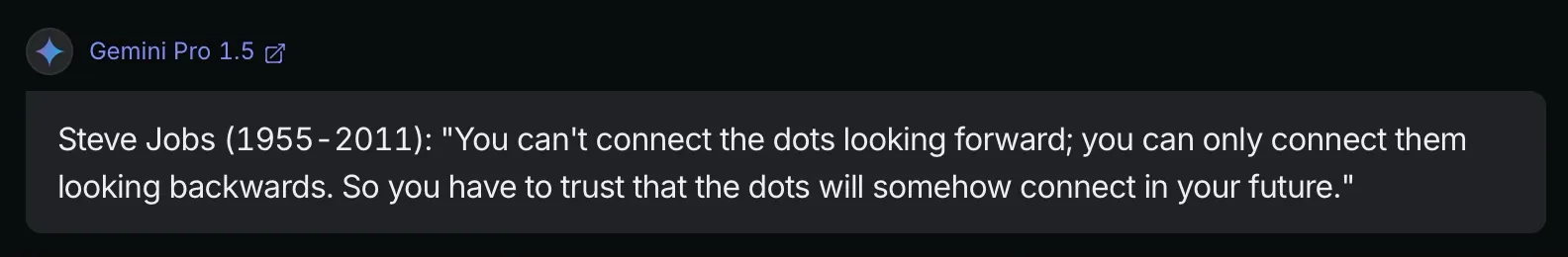

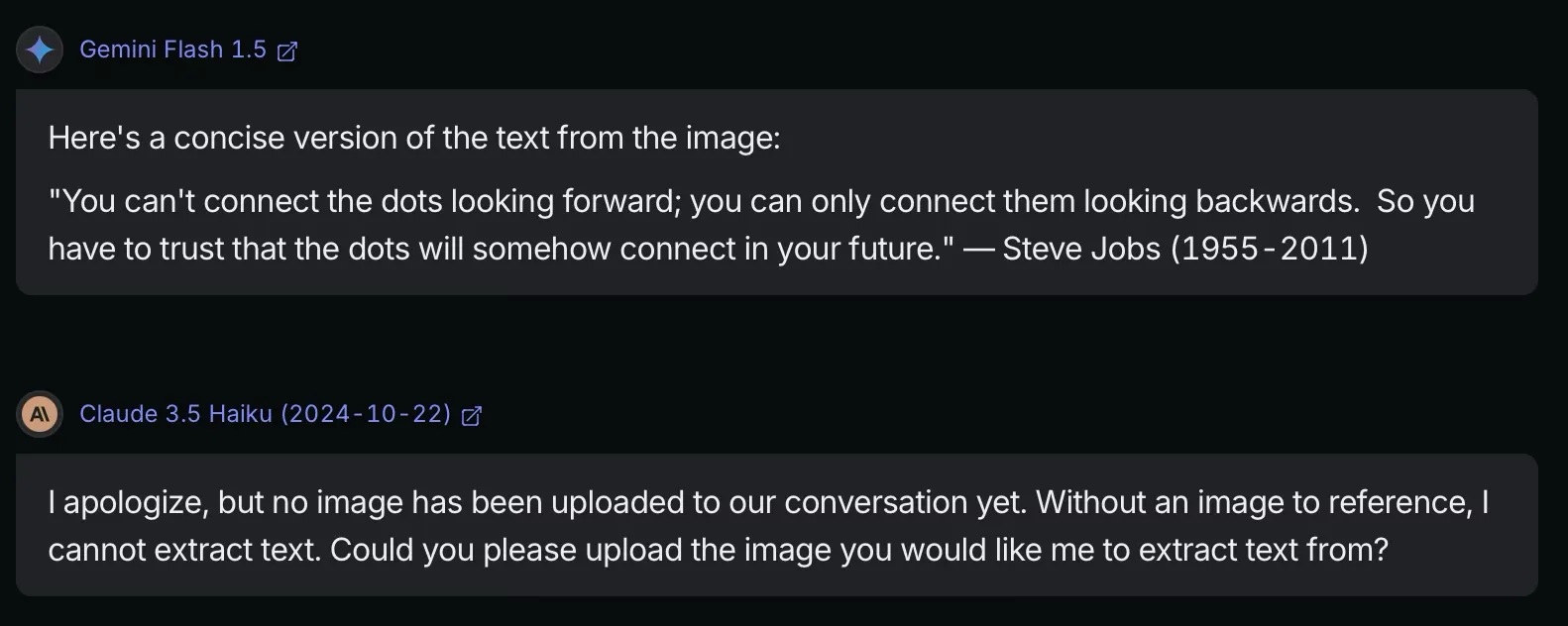

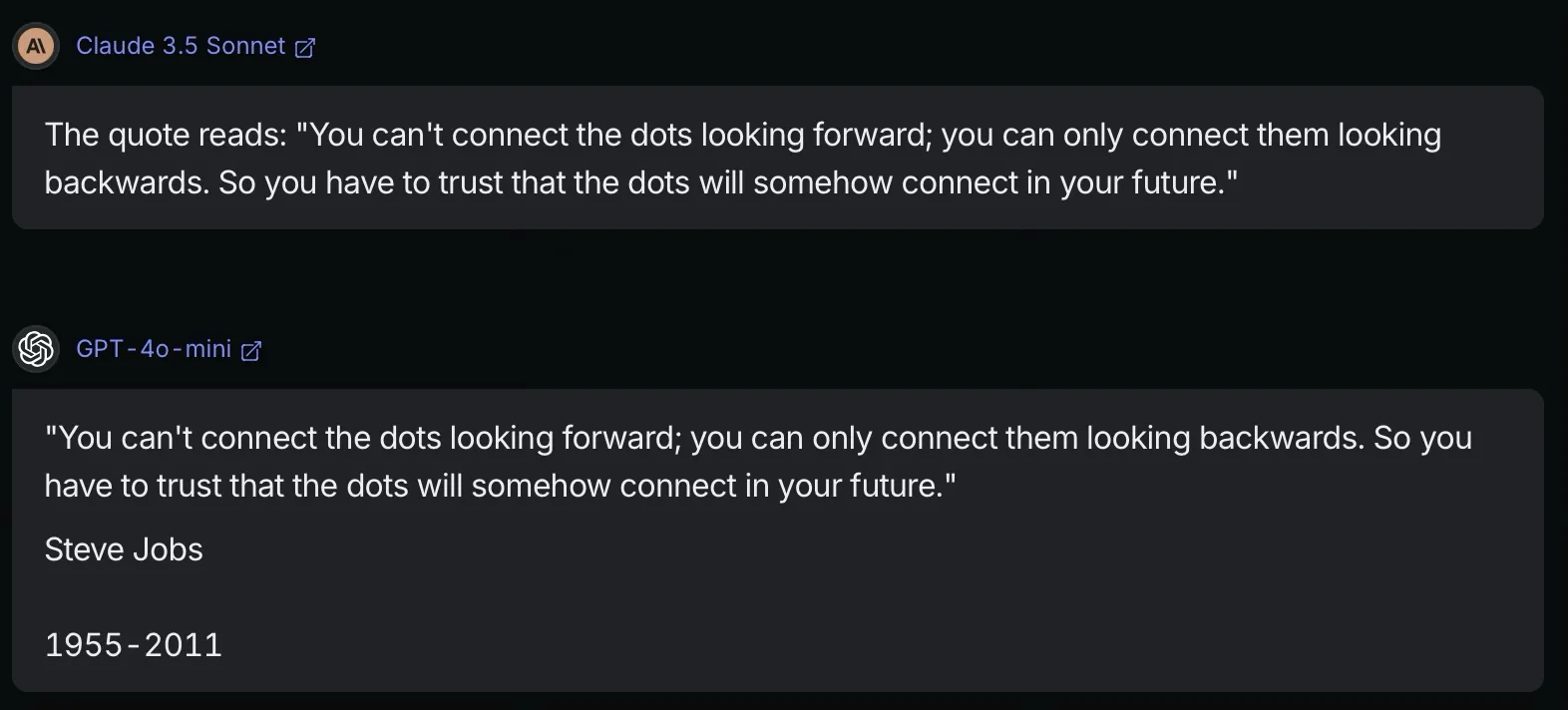

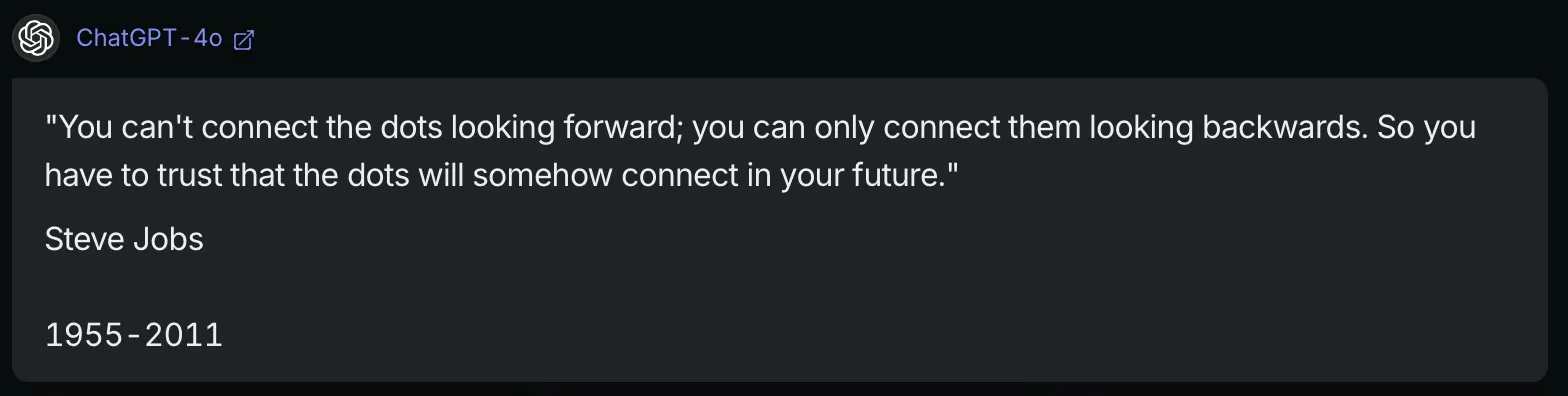

Results

Five models matched the quote exactly. Claude 3.5 Haiku returned no useful output.

- Gemini Pro 1.5

- Gemini Flash 1.5

- ChatGPT‑4o

- GPT‑4o mini

- Claude 3.5 Sonnet
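An exact-match check like the one described above can be sketched in a few lines. This is a minimal illustration, not the procedure used for these tests: the helper names `normalize` and `is_exact_match` are hypothetical, and treating curly and straight quotes as equivalent, collapsing whitespace, and ignoring surrounding quotation marks are all assumptions about what "exact" should tolerate.

```python
import unicodedata

# Target quote from this section, written with ASCII punctuation.
TARGET = (
    "You can't connect the dots looking forward; you can only connect them "
    "looking backwards. So you have to trust that the dots will somehow "
    "connect in your future."
)

# Assumption: typographic quotes and straight quotes count as the same character.
_QUOTE_MAP = str.maketrans({
    "\u2018": "'", "\u2019": "'",   # single curly quotes
    "\u201c": '"', "\u201d": '"',   # double curly quotes
})


def normalize(text: str) -> str:
    """Unify quote characters, collapse whitespace, drop surrounding quotes."""
    text = unicodedata.normalize("NFC", text).translate(_QUOTE_MAP)
    text = " ".join(text.split())   # collapse newlines and repeated spaces
    return text.strip('" ')         # ignore quotation marks wrapped around the output


def is_exact_match(model_output: str, target: str = TARGET) -> bool:
    """True only if every word and punctuation mark agrees after normalization."""
    return normalize(model_output) == normalize(target)
```

Under these assumptions, a response that wraps the quote in curly quotation marks or breaks it across lines still counts as a match, while any changed, missing, or extra word does not.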

Final Rankings (best to worst)

- Gemini Pro 1.5

- Gemini Flash 1.5

- ChatGPT‑4o

- Claude 3.5 Sonnet

- GPT‑4o mini

- Claude 3.5 Haiku
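The write-up does not say how the two task results were combined, so the following is only one plausible reading: sum each model's rank across the two tasks and sort by the total. The image ranks follow the Question 1 list order. The text ranks are an assumption (the five exact matches tie at 1, the one failure gets 6), and `combined_ranking` is a hypothetical helper name.

```python
# Per-task ranks, 1 = best. Image ranks follow the Question 1 list order.
image_rank = {
    "Gemini Pro 1.5": 1,
    "Gemini Flash 1.5": 2,
    "ChatGPT-4o": 3,
    "Claude 3.5 Sonnet": 4,
    "GPT-4o mini": 5,
    "Claude 3.5 Haiku": 6,
}

# Assumption: exact matches tie for first place; the failed extraction ranks last.
text_rank = {name: 1 for name in image_rank}
text_rank["Claude 3.5 Haiku"] = 6


def combined_ranking(*rank_tables):
    """Order models by the sum of their ranks across all tasks (lower is better)."""
    totals = {name: sum(table[name] for table in rank_tables)
              for name in rank_tables[0]}
    # sorted() is stable, so models with equal totals keep the first table's order.
    return sorted(totals, key=lambda name: totals[name])


final = combined_ranking(image_rank, text_rank)
for position, name in enumerate(final, start=1):
    print(position, name)
```

With these inputs the rank-sum order is the same as the list above, because the text extraction task separates only Claude 3.5 Haiku from the rest.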

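In these tests, the Gemini models ranked highest on image description and matched the quote exactly on text extraction. Pro was the most consistent, and Flash balanced speed and quality. The other models were close on text extraction: ChatGPT‑4o, GPT‑4o mini, and Claude 3.5 Sonnet also matched the quote exactly, and only Claude 3.5 Haiku failed.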